Claire Blackshaw

I’m a queer Creative Programmer, with a side dish of design and consultancy, and a passion for research and artistic applications of technology. I work as a Technical Director at Flammable Penguins Games on an unannounced title.

I've had a long career in games and still love them, and I also spent a few years building creative tools at Adobe.

Love living in London.

When I'm not programming, playing games, roleplaying, learning, or reading, you can typically find me skating or streaming on Twitch.

Latest Video

Lazy Sunday: Feedback Screens, Viewport Bugs, and Giving Back to Godot

TLDR: After shipping Augmental Puzzles on Meta (Feb 3rd) and Steam (Feb 13th), I spent a lazy Sunday porting our XR screenshot capture code back to Godot main as a PR. The feature was born from wanting a Subnautica-style in-game feedback screen — capture the full eye view, attach it to a mood-tagged bug report, send it to our ticketing system. Godot's OpenXR viewport capture was broken, so we went deeper and hooked into the compositor's blit pipeline. Now it's a PR that works on both Vulkan and GLES. Passthrough privacy is a bonus freebie.

It's Sunday, a lazy Sunday. The rain is beating down on England, wet as always. Yesterday, Valentine's Day, was extremely sunny, and I have to say my mood has been very bright since the initial release of Augmental Puzzles on the Meta platform on February 3rd, followed by the Steam release on February 13th. Super excited about both.

Augmental Puzzles launched on SteamVR on February 13th — cutting it close for Valentine's but we made it.

There were definitely things we missed, things I'd like to get in there, but the rush to the finish on Steam was way more chaotic than I'd like to admit.

The truth is we had a personal upset: one of our team members had a missing cat situation, which took a lot of energy, because people and pets are more important than video games. At the end of the day it is just a game, even if it is a business, so pets and loved ones come first.

So that took some energy away, but the real thing I want to talk about — and the reason we were coming in so hot at the end — was to do with a Godot bug. Or one could say, a missing feature.

The Subnautica Feedback Inspiration

One of the differences between the Meta release and the Steam release is a feedback feature. We really wanted to get this in for the Meta release, but it didn't quite make it. It's now in both versions.

Side note: I met Charlie at GDC Europe, I think, back before Gamescom had its own Devcom or whatever it was called; it's gone through so many iterations. I remember giving a talk there and afterwards sitting down for lunch-slash-coffee with Charlie and just talking about board game design. A fantastic chat. It's so wonderful when you meet people who are clearly passionate about what they do and willing to talk about the craft.

We didn't really talk business; we just talked about the craft. You've got to remember that at the time procedural generation was very much in vogue, so when Charlie pitched me the game that would become Subnautica, explaining that they were going to build the entire environment by hand, it was quite unique. I've respected his design sense ever since and followed his work closely.

One of the innovations the Subnautica team brought to their early access, and it was one of the breakthrough early access titles of those days, was a feedback screen built directly into the game.

It's become more common now, this idea that you want feedback from the people who are actually playing the game. Not someone who's spending their entire life on Twitter or Reddit or your personal Discord. You want to make it as easy as possible for the people enjoying the game to tell you: hey, I found a bug. Or hey, I have some feedback about X.

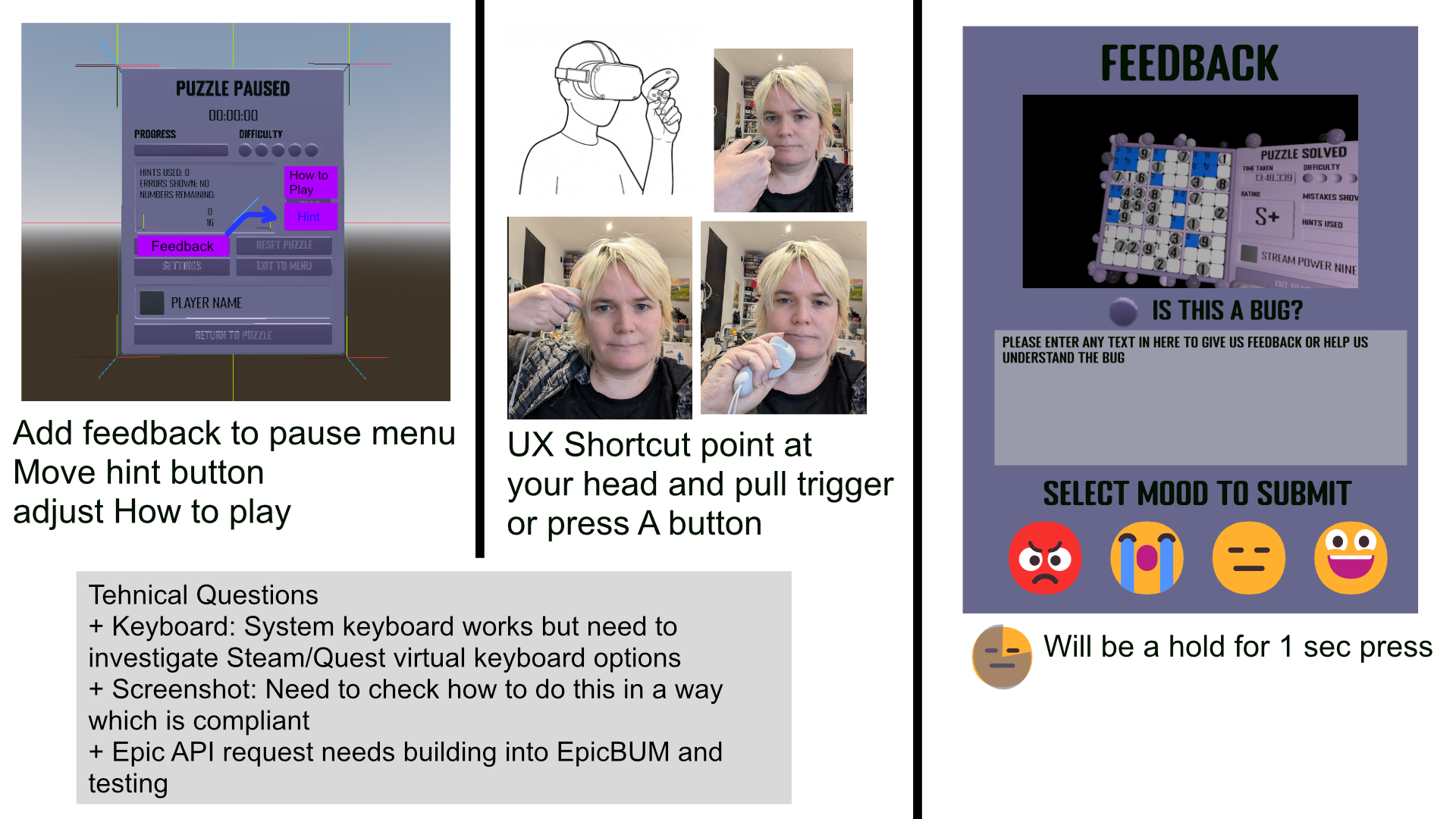

What Subnautica did very well: when you opened the feedback screen, it captured a screenshot of what you were currently seeing, presented some basic options for giving feedback, and replaced the plain old submit button with a choice of emotional states. That lets you very quickly bucket reports into "this is bringing someone joy" and "this is bringing someone rage", because sometimes a bug is quite delightful, or it's just a neutral situation. Those sentiment buckets make a very good first-line ordering system, because sometimes the stuff that brings people joy is as important as the stuff that brings people rage.
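To make the mood-as-submit-button idea concrete, here is a minimal Python sketch of a feedback report tagged with a sentiment bucket, plus the first-line triage step that groups reports by mood. `Mood`, `FeedbackReport` and `bucket_by_mood` are hypothetical names, the three moods are simply the joy / neutral / rage buckets described above, and the sample reports are made up; this is not Subnautica's or Augmental Puzzles' actual code.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List, Optional


class Mood(Enum):
    """Sentiment buckets; in the UI each one doubles as a submit button."""
    JOY = "joy"
    NEUTRAL = "neutral"
    RAGE = "rage"


@dataclass
class FeedbackReport:
    mood: Mood                        # which emoji the player pressed to submit
    text: str                         # free-form comment
    screenshot: Optional[str] = None  # path to the captured eye image, if any


def bucket_by_mood(reports: List[FeedbackReport]) -> Dict[Mood, List[FeedbackReport]]:
    """First-line triage: group reports by the mood they were submitted with."""
    buckets: Dict[Mood, List[FeedbackReport]] = {mood: [] for mood in Mood}
    for report in reports:
        buckets[report.mood].append(report)
    return buckets


# Made-up example reports.
reports = [
    FeedbackReport(Mood.RAGE, "example bug report", "shot_001.jpg"),
    FeedbackReport(Mood.JOY, "example praise"),
    FeedbackReport(Mood.JOY, "example delightful glitch"),
]
for mood, items in bucket_by_mood(reports).items():
    print(mood.value, len(items))
```

Because the mood is chosen at submit time, this first pass needs no text analysis at all: the buckets exist before anyone reads a single report.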

Early design rough — the pause menu gets a feedback button, pointing at your head is the shortcut, and mood emojis replace the submit button. The technical questions at the bottom were very much on our minds.

The VR Screenshot Problem

Of course, for this to work, you have to be able to capture a screenshot. And as we know, VR is a very performance-heavy platform with a lot of challenges.

One of the biggest is that we tend to have multiple viewports or views — one for each eye, sometimes in foveated situations it's actually four render targets or more. But assume we're dealing with the classic two-eye situation.

The reality is that what you see in the headset is typically not well represented in the desktop view. In the Godot implementation specifically, it has the classic crop-at-the-top problem that most engines have by default: it crops to 16:9, maybe centres it, whatever. But in VR we know that people's dominant view, while it depends on the game, is generally below the horizon line, especially because headsets are a bit heavy, drag you down, and tend to make you look down.

If you capture that 16:9 crop, you're throwing away information that could be key for diagnosing the bug or seeing what the player sees. Maybe it's at the corner of their vision. Maybe there's something you're missing.
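A quick bit of arithmetic shows how much a 16:9 crop discards. The sketch below computes the largest centred crop of a given aspect ratio and the fraction of the eye image it keeps. The 1920x1920 per-eye resolution is a made-up round number for illustration, not any particular headset's spec, and the function names are hypothetical.

```python
def centred_crop(width: int, height: int, aspect_w: int = 16, aspect_h: int = 9):
    """Return (x, y, w, h) of the largest centred crop with aspect aspect_w:aspect_h."""
    if width * aspect_h >= height * aspect_w:
        # Source is at least as wide as the target: keep full height, trim the sides.
        h = height
        w = height * aspect_w // aspect_h
    else:
        # Source is taller than the target: keep full width, trim top and bottom.
        w = width
        h = width * aspect_h // aspect_w
    return (width - w) // 2, (height - h) // 2, w, h


def retained_fraction(width: int, height: int) -> float:
    """Fraction of the source pixels that survive the centred 16:9 crop."""
    _, _, w, h = centred_crop(width, height)
    return (w * h) / (width * height)


print(centred_crop(1920, 1920))       # (0, 420, 1920, 1080)
print(retained_fraction(1920, 1920))  # 0.5625
```

For a square eye buffer, the centred 16:9 crop keeps only 56.25% of the pixels and drops 420 rows from both the top and the bottom, including part of the region below the horizon where players often look.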

So our first problem was that we wanted more than just what's on the viewport. That said, because we were rushing to get the Steam version out, we'd have settled for just grabbing the viewport texture.

Well, it turns out there's a problem there that's unique to OpenXR. The OpenXR framebuffer render target currently isn't set up through the rendering server correctly. It's not wrapped properly, which means a lot of the viewport-capture code you'd use outside of VR doesn't actually work in VR at the moment. The OpenXR team does have a PR that should fix how the rendering server sets it up.

So there was our first problem. I admit we lost a day or two trying to track this down, thinking it was one of our bugs, thinking "this is such a basic feature, what the hell" — losing time as you do when you hit one of these.

Then I got talking to the other maintainers on the XR channel and was told about the situation. I was pointed to the PR, which was not yet merged and targeted a much newer version than the one our engine fork is based on. I was worried that changing our framebuffer setup this late in the game could introduce performance headaches, so I was quite nervous of that approach.

Into the Compositor

I dug into the code and thought: well, we know we're blitting to the screen because I can see it. So we definitely have a route in there.

That led me to the render compositor and how it handles the post-frame blit. This immediately tickled my brain. In future versions of Augmental Puzzles, I want to add a better stabilised spectator camera, optionally turned on because it will cost performance, to give people who are streaming or recording a more pleasant, traditional video presentation. I've been experimenting with traditional video frame stabilisation techniques to remove head jitter without re-rendering the frame, using knowledge of how the head moves to adjust. But anyway, future things.
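As a rough illustration of stabilising from known head motion, the sketch below smooths a per-frame head angle track with an exponential moving average and returns, for each frame, the correction rotation that would move the already-rendered frame onto the smoothed path. The post doesn't say which filter is being used; the EMA, the per-axis treatment of angles, and the function names are all assumptions. A real stabiliser would more likely smooth full orientations (for example as quaternions) and reproject the image.

```python
from typing import List


def ema_smooth(track: List[float], alpha: float = 0.1) -> List[float]:
    """Exponential moving average of a per-frame angle track (degrees).

    Smaller alpha gives a smoother path but lags further behind real head motion.
    """
    smoothed = []
    s = track[0]
    for angle in track:
        s += alpha * (angle - s)
        smoothed.append(s)
    return smoothed


def correction_angles(track: List[float], alpha: float = 0.1) -> List[float]:
    """Per-frame rotation (degrees) that moves each already-rendered frame
    from the raw head orientation onto the smoothed camera path.

    Apply this separately per axis (e.g. yaw and pitch); this is a simplification.
    """
    return [s - raw for s, raw in zip(ema_smooth(track, alpha), track)]


# Made-up yaw track: a small left-right jitter around zero.
yaw = [0.5 if i % 2 == 0 else -0.5 for i in range(8)]
print([round(c, 3) for c in correction_angles(yaw)])
```

The `alpha` parameter is the usual trade-off: lower values give a steadier spectator view but trail further behind deliberate head turns. Actually applying the correction to the captured image (a shift or reprojection) is left out here.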

The good news was that I'd located the code and understood the blit pipeline, and it was pretty doable to quickly add another option to the XR interface that lets you trigger a screenshot capture. The capture waits until the appropriate post-draw moment, so there are no timing synchronisation issues, sends the frame through the blit pipeline, and writes it to the graphics resource you provide: a texture RID that you own and have set up. Its lifecycle is yours to manage.

That signal-process pattern mapped really cleanly, and I was quite happy with it.
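Here is a minimal Python sketch of that request-then-service pattern, under stated assumptions: `CaptureTarget` stands in for the caller-owned texture, `CompositorSketch.post_draw` stands in for the compositor's post-draw blit point, and the callback plays the role of the signal. None of these names are Godot APIs, and the real PR's interface may look quite different.

```python
from typing import Callable, List, Optional, Tuple


class CaptureTarget:
    """Stand-in for a caller-owned texture. The caller creates it and frees it."""

    def __init__(self, width: int, height: int) -> None:
        self.width = width
        self.height = height
        self.pixels: Optional[bytes] = None


DoneCallback = Callable[[CaptureTarget], None]


class CompositorSketch:
    """Hypothetical compositor: capture requests queue up and are serviced
    only at the post-draw point, so there is no race with rendering."""

    def __init__(self) -> None:
        self._pending: List[Tuple[CaptureTarget, DoneCallback]] = []

    def request_capture(self, target: CaptureTarget, on_done: DoneCallback) -> None:
        # Safe to call at any time; nothing is copied yet.
        self._pending.append((target, on_done))

    def post_draw(self, eye_image: bytes) -> None:
        # The frame is finished: service every queued request exactly once.
        pending, self._pending = self._pending, []
        for target, on_done in pending:
            target.pixels = eye_image  # stands in for the blit into the target
            on_done(target)            # the "signal" back to game code


captured: List[CaptureTarget] = []
target = CaptureTarget(4, 4)
compositor = CompositorSketch()
compositor.request_capture(target, captured.append)
print(target.pixels)  # None: nothing is copied until the frame is drawn
compositor.post_draw(b"\x00" * 48)  # 4x4 RGB frame
```

Swapping out the pending list before servicing it means a callback that immediately requests another capture gets it on the next frame rather than inside the same loop.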

I was also chuffed that, thanks to how it works and some of the other work the Godot rendering team has done, we have really good methods for asynchronously grabbing that texture data, and we use the WorkerThreadPool to offload the saving and JPEG encoding. Making the file still takes processing power and time, but it shouldn't hitch the frame or block anything.
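The shape of that offload, sketched in Python: the frame thread hands the raw pixels to a worker pool and gets a future back immediately, and the encoding (plus an optional file write) happens on a worker. `ThreadPoolExecutor` stands in for Godot's WorkerThreadPool, and because the standard library has no JPEG encoder, the hypothetical `encode_ppm` writes an uncompressed PPM as a placeholder; `save_capture_async` is also a hypothetical name.

```python
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Optional


def encode_ppm(width: int, height: int, rgb: bytes) -> bytes:
    """Placeholder encoder: binary PPM (P6). A real build would encode JPEG here."""
    if len(rgb) != width * height * 3:
        raise ValueError("expected tightly packed 8-bit RGB")
    header = f"P6\n{width} {height}\n255\n".encode("ascii")
    return header + rgb


# One shared pool, standing in for an engine-wide worker pool.
_pool = ThreadPoolExecutor(max_workers=2)


def save_capture_async(width: int, height: int, rgb: bytes,
                       path: Optional[str] = None) -> Future:
    """Queue encoding (and optional saving) on a worker; returns immediately."""
    def job() -> bytes:
        data = encode_ppm(width, height, rgb)
        if path is not None:
            with open(path, "wb") as f:
                f.write(data)
        return data
    return _pool.submit(job)


future = save_capture_async(2, 1, bytes([255, 0, 0, 0, 255, 0]))  # red, green
# ... the frame loop carries on here ...
print(future.result()[:11])  # b'P6\n2 1\n255\n'
```

In a game loop you would poll `future.done()` or attach `future.add_done_callback(...)` rather than calling `result()`, which blocks until the worker finishes.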

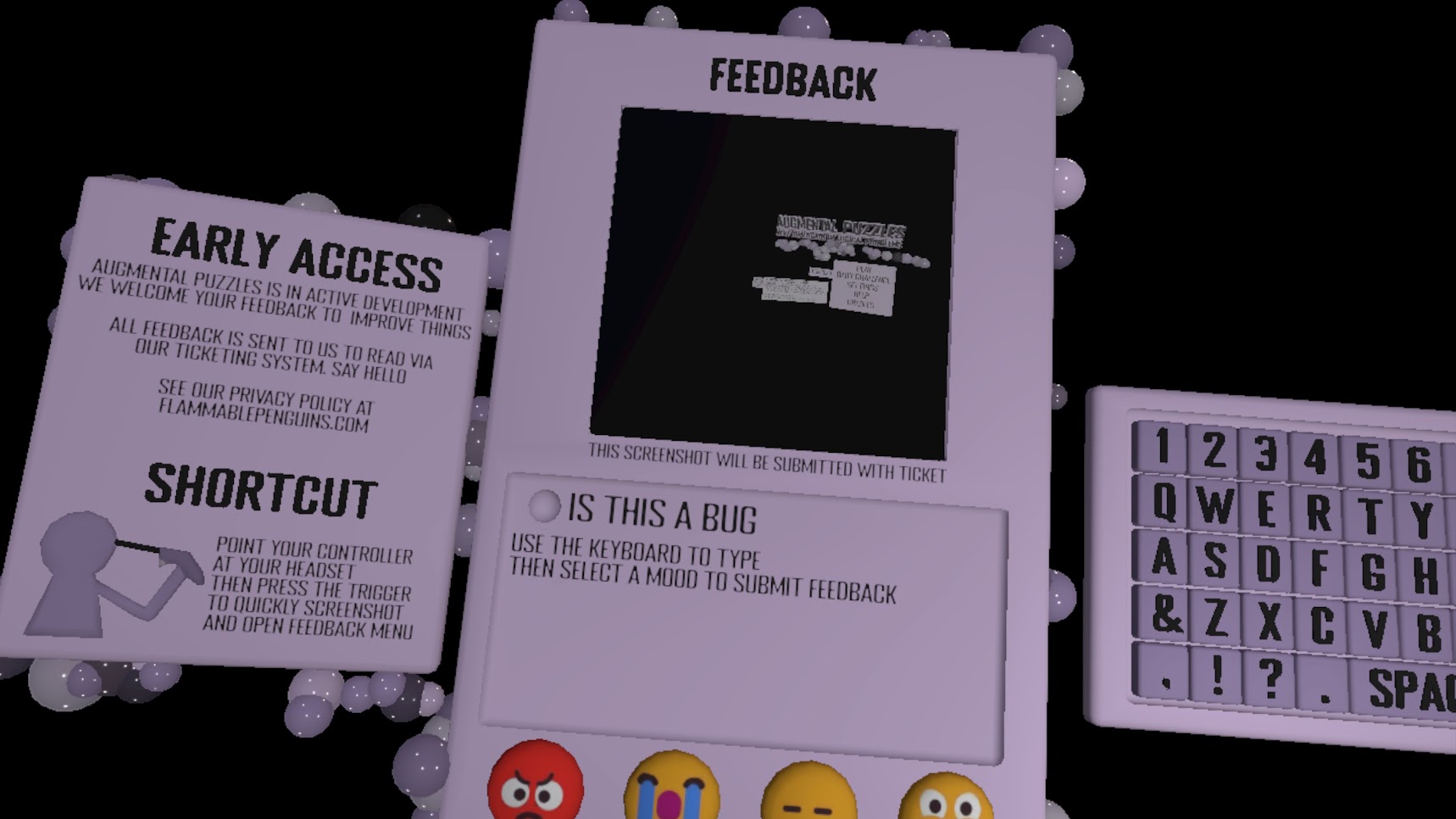

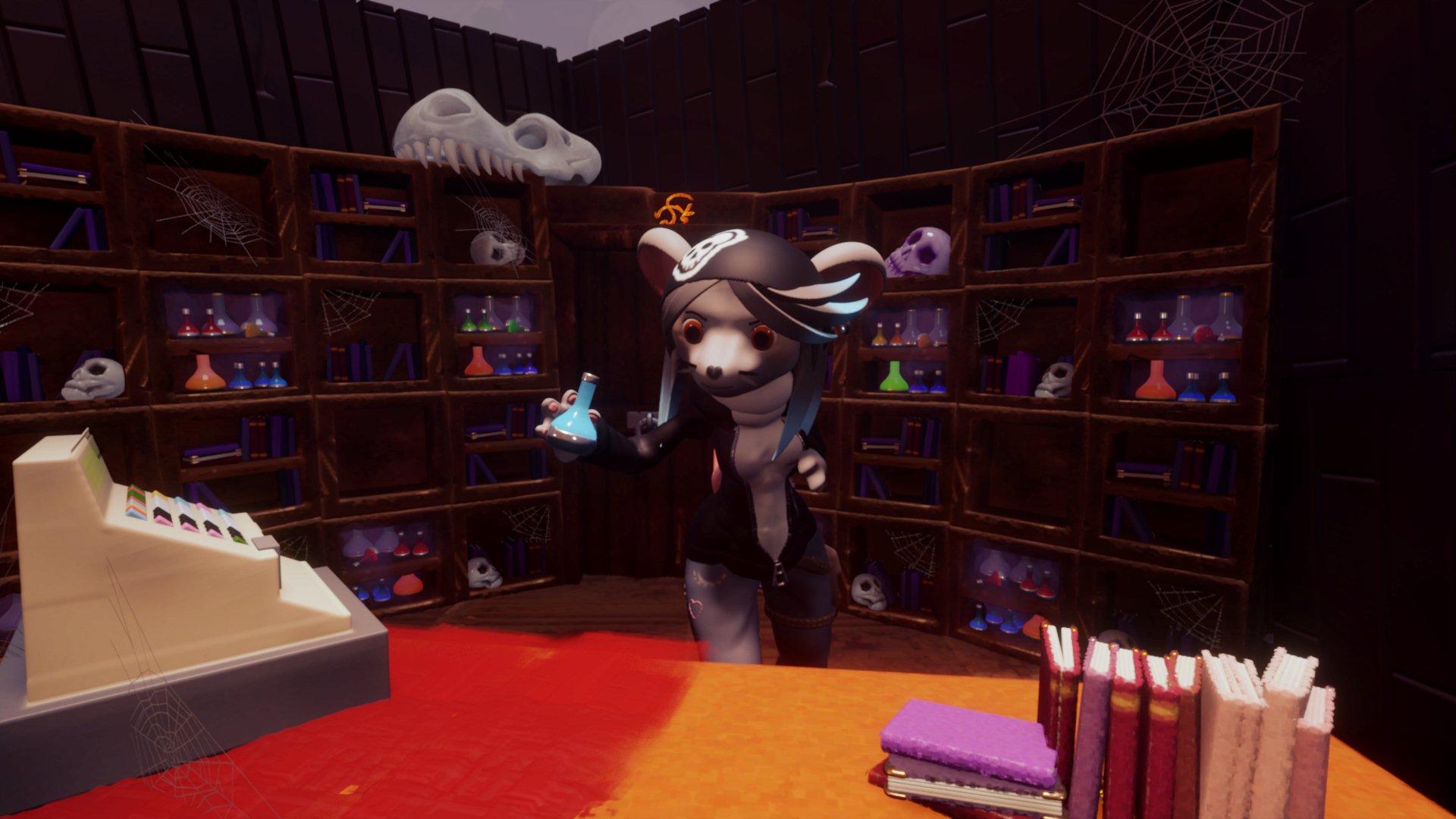

The final feedback screen in-game. Full left eye capture displayed in the frame, virtual keyboard for text, and mood emojis to submit. The early access notice and shortcut instructions are on the side panel.

We now get the full left eye in that screenshot. Bonus points: because of how passthrough is handled, the capture doesn't include what people see through their passthrough, just our game view. So we don't have privacy concerns there, which is, again, fabulous.

Porting It Back to Main

One of the principles I want to maintain — it's not always possible, and it hasn't always been — is to share these kinds of little fixes and work back to the main branch.

So I took a bit of a lazy Sunday and did some of that work. The problem was that Godot main is a little bit ahead of where our engine forked off. Merge conflicts, slightly different behaviours. And when I originally wrote it, I wrote exactly what we needed — much quicker and easier because I knew we were using Vulkan, so we could just do the Vulkan bit.

For the PR, I had to make a GLES version for OpenGL as well, not just the RenderingDevice (RD) compositor path. So I did that, fleshed it out, made a little demo project so people can see how I envision it being used, wrote the docs, and put it all together.

And yeah, I'm really chuffed with how this has all gone. The feedback screen is in Augmental, we have all that pipeline working, and I think it'll be a really strong tool going forward. Feedback comes through directly to one of our systems and we can view it instantly. Fantastic.

It just feels really good to take something small like this — not a big chunk of work to bring back to main — knowing it will make some people's lives a little bit better. If you want to do a feedback screen of your own in your own Godot game, or you just want better VR screen capture, well, you've got the option now. And if you want to spend a little extra frame time converting from linear to sRGB, it's there as an option.
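For reference, a linear-to-sRGB conversion boils down to the standard sRGB transfer function (IEC 61966-2-1) applied per colour channel. This Python sketch applies it to one linear RGB pixel and quantises to 8 bits; it is just the formula, not the PR's code, and the function names are hypothetical.

```python
def linear_to_srgb(c: float) -> float:
    """sRGB transfer function (IEC 61966-2-1) for one linear channel."""
    c = min(max(c, 0.0), 1.0)  # clamp to the displayable range
    if c <= 0.0031308:
        return 12.92 * c       # linear segment near black
    return 1.055 * c ** (1.0 / 2.4) - 0.055


def linear_pixel_to_srgb8(rgb):
    """Convert a linear (r, g, b) float pixel to 8-bit sRGB values."""
    return tuple(round(linear_to_srgb(c) * 255) for c in rgb)


print(linear_pixel_to_srgb8((0.0, 0.5, 1.0)))  # (0, 188, 255)
```

That 0.5-to-188 mapping is why saving linear values straight into an image file usually makes the capture look too dark: viewers assume the file is already sRGB-encoded.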

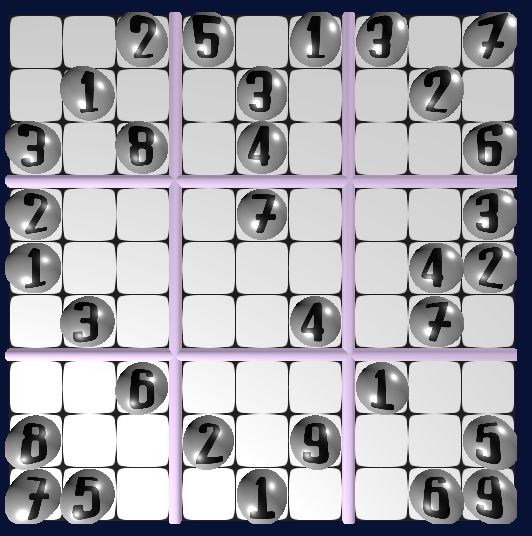

The Valentine's Day daily challenge I hand-set for Feb 14th. This is a great example of why hand-crafted puzzles are worth the effort — you can do things that procedural generation simply can't.

What's Next

We've got a lot ahead with Augmental Puzzles. I feel like that's all I'm talking about these days, but forgive me — that really is my life right now, and it feels so good to be able to finally talk and share the work we're doing.

PRs usually take a while to go through review and get merged, so don't hold your breath. But it's there, I've done the work, and I can get back to my stuff.

I hope to share more of what I'm working on in more detail now that I can be more public about the project.

Anyway, I hope you had a good Valentine's, I hope you enjoyed that one day of sun we got, and that you're inside and dry for today. Toodles.

Links

- Augmental Puzzles on Steam

- Augmental Puzzles on Meta Quest

- Godot PR: Capture XR to Texture

- Tech Toolbox: Subnautica's Feedback System, a great talk on Subnautica's feedback setup

Current Hobby Projects

Recent Hobby Projects

VR Physics Game

Networked Physics VR game

TextDB

Text log file based Key Value Database

LLM ADHD Friend

My experiments in LLM Assistant

Website Update

Doing a big refresh on the website

Skate All of August - 2020

Skate & Film Challenge

Dreams Tutorials and Shorts

Education Series

Game Dev | Null

True Stories from Making Games

Raycaster

Simple playground to try some Raycaster coding

InkPub

Web Writing and Publishing Software

UXPlayground

Playground to try some UX ideas out on

Social Bits and Bobs

Website: Claire Blackshaw

Flammable Penguins: Small Press Publishing

Mastodon: @kimau@mastodon.gamedev.place

BSky: @EvilKimau

Twitter: @EvilKimau

YouTube: YouTube

LinkedIn: Claire Blackshaw

Twitch: Kimau

Github: Kimau

TikTok: @EvilKimau

Tumblr: Forest of Fun

Book list: Good Reads